Every year, program directors sign off on their final-year residents as competent to practice independently in their specialty. Yet how confident are they that each of these doctors is ready? Before competency-based medical education was introduced in 2001, residents completed their required training time and graduated, with the final hurdle being a knowledge-based, multiple-choice specialty exam. However, the public and those who pay for medical care (employers and insurers) wanted greater accountability in assuring the competence of graduating physicians. In 2001 the US accrediting body for residency programs, the Accreditation Council for Graduate Medical Education (ACGME), established six general competencies that all graduates must demonstrate. These are patient care, medical knowledge, interpersonal and communication skills, professionalism, systems-based practice (the ability to use the resources of the health care system) and practice-based learning and improvement (the ability to self-monitor and improve the quality of care a doctor provides). Program directors thereby assumed the responsibility of developing assessment methods that accurately discriminate residents who achieve the requisite competencies from those who do not. I will provide an overview of the assessment methods currently in use. More detailed resources are listed in the references below, and you are encouraged to consult them.

Two concepts are important to appreciate about any assessment method. First, it must be valid, meaning that what is measured truly reflects the trainee’s ability. An exam on the minutiae of biochemical processes does not reflect how well a resident manages a patient. Second, it should be reliable, so that if it is repeated multiple times with the same resident the results are consistent. The good news is that several assessment methods with proven validity and reliability exist.

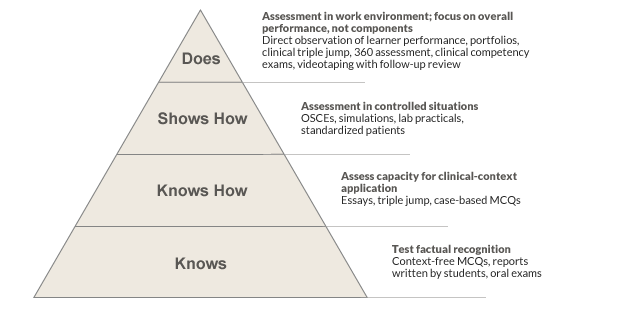

Assessment of clinical competence can be visualized with a model developed by George Miller that takes the form of a pyramid (see figure 1). At the base of the pyramid is a foundation of factual knowledge. This knowledge is essential but not sufficient for becoming a competent clinician and is usually tested early in medical school with multiple-choice exams. More advanced skills assessment is represented further up the pyramid. At the next level, the trainee must demonstrate that she knows how something is done. She may be asked to describe how to assess a patient presenting with pleuritic chest pain and hypoxia. Oral exams or case-based questions can effectively assess this level. Further up the pyramid, the trainee must show how something is done. At this level she must demonstrate her physical exam or history-taking skills on a simulated patient (an actor trained to portray a patient) or, for procedural skills, on a manikin, while observed by a faculty member. At the very top of the pyramid, the trainee demonstrates that she can actually deliver clinical care effectively to a patient. Directly observing a trainee as she examines or interviews a patient provides a window into the care she actually provides.

No single level of the pyramid can or should be the only aspect evaluated. Time, resources, available faculty and convenience should all be considered when choosing methods. Programs are encouraged to use multiple methods of assessment. Consider each method a snapshot: collecting multiple snapshots builds a 3-D image of the trainee.

Global rating is the most common and universal method used. Faculty complete a set of questions on an evaluation form, each question addressing a specific competency and rated on a scale (1-5, 1-9, etc.), with a higher number reflecting greater competence. Global rating forms are time efficient and adapt easily to the setting of assessment. In addition, they can be used to get the perspective of different members of the health team (called 360-degree assessment), including peers, nurses and even patients. Reliability can be a limitation because faculty often have different expectations of competence: some are hard graders and others easy graders. Global rating is also intuition-based, leading to the problem of the halo effect, where all of the competencies are rated the same based on impressions of one competency. For example, a resident who presents well on rounds may be given high ratings for clinical skills that were never observed. Global rating is, nevertheless, the most commonly used method of evaluation and has some validity. Faculty development and explicit evaluation forms can improve reliability.

Direct observation allows faculty to watch a resident performing a specific task (exam, history, procedure) and then complete a checklist on what was observed. It is also a great opportunity for providing feedback. The form commonly used in resident education is the clinical evaluation exercise, or CEX. While faculty cite time as the main reason for not performing CEXs (even finding 10 minutes to observe a resident is challenging), they often become converts when they actually see a trainee perform an exam incorrectly, miss a finding or respond poorly to a patient’s query. The literature suggests 8-10 observations are necessary for valid assessment. Review of videotaped interactions with patients can be just as valuable. Faculty development on observing effectively and on building observation into busy schedules can make direct observation an effective means of assessing what a resident “does” on Miller’s pyramid.

Chart-stimulated recall (CSR) and chart audits use a resident’s charting of a patient encounter as the basis for assessing her clinical decision making in practice. For the CSR, the faculty member, together with the resident, reviews a progress note written by that resident for a clinical encounter and asks about the thought processes behind her clinical decisions. It is an excellent way to probe a resident’s medical knowledge and clinical reasoning but is limited by time (typically 30 minutes per encounter) and the need for faculty training. A chart audit consists of a review of trainee clinical documentation and record keeping and is particularly useful as a quality measure of the patient care a resident provides. Audits typically measure health maintenance compliance and HbA1c and blood pressure levels, data that are often easy to obtain through electronic health records. Audits can also examine the quality of documentation, including identifying “cut and paste” in electronic medical records that impairs document accuracy.

Simulation uses manikins and simulated patients (trained actors) and can be useful for assessing the "shows how" ability of the trainee. It can be expensive, with significant upfront costs, but it is extremely valuable for learning and feedback. Multiple-choice exams can help trainees prepare for certification exams, though they are mostly useful for assessing knowledge base, the lowest level of Miller's pyramid.

One final comment concerns the value of faculty development. Useful assessment requires skill, which can be developed with a little training. Faculty will benefit from a review of the assessment form and of agreed-upon expectations of residents at different points in their training. Narrative comments should always be encouraged for any assessment method because the richness they provide tells much more about a trainee’s ability than numbers and scales. Ultimately, the goal is to be confident that we are graduating doctors we are comfortable recommending to family and friends. Incorporating different assessment methods and preparing faculty to use them effectively will go a long way towards achieving this goal.

References

- Ansari A, Ali SK, Donnon T. The Construct and Criterion Validity of the Mini-CEX: A Meta-Analysis of the Published Research. Acad Med. 2013;88(3):413-20.

- Epstein RM. Assessment in Medical Education. N Engl J Med. 2007;356:387-396.

- Holmboe ES, Hawkins RE, editors. Practical Guide to the Evaluation of Clinical Competence. Philadelphia, PA: Mosby Elsevier; 2008.

- Miller GE. The assessment of clinical skills/competence/performance. Acad Med. 1990;65:S63-S67.

- Raj JM, Thorn PM. A Faculty Development Program to Reduce Rater Error on Milestone-Based Assessments. J Grad Med Educ. 2014;6(4):680-685.

- Scalese RJ, Obeso VT, Issenberg SB. Simulation Technology for Skills Training and Competency Assessment in Medical Education. J Gen Intern Med. 2008;23(Suppl 1):46-49.

- Williams RG, Dunnington GL, Klamen DL. Forecasting Residents’ Performance-Partly Cloudy. Acad Med. 2005;80:415-422.

Written for March 2015 by

Asher A. Tulsky, MD

Associate Professor of Medicine,

Division of General Internal Medicine,

University of Pittsburgh,

Pittsburgh, Pennsylvania,

USA